Due to changes in Q4 and Q5 of the academic calendar, a new balance needs to be found between time pressure and quality in assessment and education. On this page you will find an overview of the changes and the challenges related to assessment, as well as measures and ideas to face these challenges, most of which aim to minimise the grading time of the assessment.

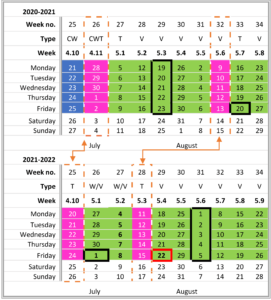

As of 2021/2022, the academic calendar has been changed. The changes are summarized in the figure and the table below.

- Purple: exam week

- Black box: deadline for handing in all grades

- Red box: deadline for handing in grades for first-year bachelor courses

| Change | Challenge and deadline | Explanation |
| --- | --- | --- |
| Shorter education period Q4 (week 4.11 is removed) | Cover all learning objectives (LOs) with fewer teaching and learning activities | Student workload should be feasible, and programme outcomes (ILOs) should be met by LOs |
| Earlier resits/additions (week 5.6 → 5.3) | Grade all Q4 assessments before 1 July 2022 (Friday week 5.1) | Students need to plan their resits one week before the first exam. 1 July 2022 is the final deadline for communication of all Q4 grades via OSIRIS, regardless of assessment type |
| | Students need to be able to inspect their assessed work and discuss their grades with an examiner between 4-8 July 2022 (week 5.2) | Give students feedback before the resit, and enable students to withdraw from the exam in time if they pass the course after the discussion (withdrawal can be done until 3 calendar days before the exam, see TER art. 20 and TER art. 21) |
| Shorter grading period for 1st year bachelor courses | 1st year bachelor courses: grade all (Q5) assessments before 22 July 2022 (week 5.4) | Students receive their BSA at the beginning of August. Therefore, all grades from the first year need to be collected before 22 July (beginning of August). |
| | All other courses: grade all Q5 assessments before 1-5 Aug 2022 (week 5.6) | Deadline for communication of Q5 grades via OSIRIS remains 15 working days after the date or deadline. |

The challenge is to cover all LOs with fewer teaching and learning activities in less time. Some activities may be combined or skipped. Please keep in mind that these activities should be constructively aligned with the learning objectives and assessment, and that the learning activities should stimulate active learning.

Changes to LOs are only permitted after approval by the Director of Studies and the Board of Studies. More ideas on how to tackle this will be made available at a later time.

For all assessments, adhere to the following general quality requirements:

- Validity

- Reliability

- Transparency

- Feasibility

- Stimulate learning

Specifically for digital exams, the following requirements must also be met:

- Practice with the assessment tool and the question types: students (and you) need to practice with both the question types and the assessment tool before the exam.

- Arrange for technical support for your students and yourself during the exam.

Face the grading challenges

The following measures can help you to minimise the time between the assessment and publishing the grade. The measures are divided into ideas that are relevant while constructing the questions and the exam/assignment, and ideas on optimising the grading process.

Optimise the grading process

- Organise a second examiner who can take over if you happen to be unavailable during the course, exam or grading. Make sure they have access to all of your material. This is especially relevant during the pandemic. Share the following documents with your colleague:

  - Exam / assignment

  - Answer template

  - Answer model / rubric / assessment sheet

- Find teaching assistants (TAs) early in the academic year (otherwise, they might have other plans), either via ESA in your faculty (e.g. TPM) or by contracting them via your department. Train and supervise your TAs and let them exchange experiences, to ensure good-quality, efficient grading and to update the answer model on the go. Your faculty can arrange a blended training for your TAs.

- Fully organise and prepare the grading of the exam/assignment as well as the resit/addition. Arrange help for the process long before TAs and colleagues plan their holidays.

Optimise the questions, the exam/assignment, and the use of digital assessment tools

| What | Why is this faster? | Warnings/examples |
| --- | --- | --- |
| Divide large questions into multiple smaller questions | Answers to short questions are more uniform and therefore faster to grade | Warning: Make sure that the answers to ‘analyse’- and ‘evaluate’-level questions are not revealed by the follow-up questions. Students might not need to work at the ‘analyse’ level of Bloom to determine the steps if the a-b-c-d subquestions already hint at them. |
| Prevent continuation errors (doorrekenfouten) in follow-up (sub)questions | You do not have to check students' calculations in follow-up questions that were made with incorrect numbers | How: In case of subquestions that can result in continuation errors, ask students to continue working with a specific (different) value for each subquestion. Make sure that this value is not the answer to the previous subquestion. |
| Limit the answer length | Fewer words to read | |
| Provide scrap paper | Easier to read and follow, since fewer texts and graphs contain crossed-out parts | |
| Instruct students to use a given template for their answer | You know where to look. | |
| Have students type the answers | Reduces time loss due to legibility issues | Warning: It might take students more time to type their answers than to write them. Example: Mathematical questions or questions in which students have to use many symbols |
| In case of handwritten exams: instruct students to write from top to bottom. | Easier to read the answers. | |
| In case of numerical questions: instruct students to write out their working as a formula first, before filling in the given or calculated values of the variables in the formula | This will make it easier to spot errors. | Warning: You will need to have students practice with this during the course, and give them feedback, too. Furthermore, inform students about this before and during the exam. |
| In case of open-ended problems with multiple solution routes: ask students which solution strategy they took, and divide the options over graders. | It is faster to grade answers that used the same solution route. | |
| Use automatically gradable question types | It is faster to have software grade the questions automatically | |

Negative effects of automatic grading

Using digital assessment tools to grade answers automatically decreases the time to grade considerably. However, automatic grading decreases the reliability of the grade:

- If exams are scored automatically, you have no insight into why students ended up with an incorrect or correct answer, and therefore you have fewer indications of whether questions contained errors or ambiguities. In manually graded questions in which students explain their answers, ambiguities or errors in the question would become apparent in the given answers.

- It is not fully possible to grant partial points for partially correct answers. As a result, grades will be lower than in manually graded questions, where most lecturers would award partial points for partially correct answers.

- In case of e.g. multiple choice questions, guessing can result in higher grades.

- As with any assessment tool, students might be hindered in their performance by technical problems or lack of practice with the tool.

Measures to mitigate the negative effects of automatic grading

These negative effects can be mitigated by the following measures before and after the exam:

Before the exam

- Grant partial points for partially correct answers

Give partial points for partially correct answers. All-or-nothing scoring reduces the precision of the grade.

- Attribute low weight to all-or-nothing questions

Questions that are scored with either all or no points should not carry too many points, so that the step size of the grades stays small and the grade stays precise. Split up questions if necessary.

- Closed-ended questions like multiple choice: correct the grade for guessing

In the case of multiple choice questions (or other closed-ended questions), correct for guessing when calculating the grade from the scores. In the examples below, grade is the exam grade ∈ [1,10], score is the sum of points that an individual student received for their (partially) correct answers in the assessment, guessing_score is the average score obtained by randomly guessing answer options, and max_score is the maximum score that students can receive by answering all questions fully correctly.

- Original formula 1: grade = 1 + 9*(score/max_score)

New formula 1: grade = 1 + 9*((score-guessing_score)/(max_score-guessing_score))

- Original formula 2: grade = 10*(score/max_score)

New formula 2: grade = 10*((score-guessing_score)/(max_score-guessing_score))
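As an illustration, the guessing correction could be sketched in Python as follows. This is a minimal sketch, not a prescribed implementation: the function names are hypothetical, the helper for guessing_score assumes single-answer multiple choice questions where every option is equally likely to be guessed, and clamping the result to the grade scale is an added assumption that is not part of the formulas above.

```python
def expected_guessing_score(questions):
    """Average score obtained by guessing at random.

    `questions` is a list of (points, number_of_options) pairs.
    Assumption: single-answer multiple choice, all options equally likely.
    """
    return sum(points / options for points, options in questions)


def corrected_grade(score, max_score, guessing_score, lowest=1.0, highest=10.0):
    """Grade with correction for guessing.

    lowest=1 reproduces new formula 1; lowest=0 reproduces new formula 2.
    """
    if max_score <= guessing_score:
        raise ValueError("max_score must be larger than guessing_score")
    fraction = (score - guessing_score) / (max_score - guessing_score)
    # Assumption of this sketch: scores below the guessing level are
    # clamped to the lowest grade instead of dropping below the scale.
    fraction = min(1.0, max(0.0, fraction))
    return lowest + (highest - lowest) * fraction


# Hypothetical exam: 40 questions, 1 point each, 4 answer options each.
questions = [(1, 4)] * 40
max_score = 40
guessing_score = expected_guessing_score(questions)

uncorrected = 1 + 9 * (25 / max_score)                      # original formula 1
corrected = corrected_grade(25, max_score, guessing_score)  # new formula 1
print(guessing_score, uncorrected, corrected)
```

In this hypothetical exam, a score of 25 out of 40 yields 6.625 with the original formula 1 and 5.5 with the new formula 1, because 10 of the 40 points can be expected from guessing alone.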

After the exam

Check if you need to correct your answer model:

- Use test result analysis to spot issues in the question or answer model, especially in automatically graded questions. If a question has a negative or low correlation with the total score or with the scores on the other questions, consider possible causes and change the answer model accordingly. Fix issues for all students. For more information on test result analysis, see the UTQ ASSESS reader.

- If students who contact you have valid arguments on why their answer is (partially) correct, grant all students with similar answers full or partial points.

- You could ask students to hand in their elaborations in addition to entering their final answer in the assessment tool. That way, you can check the reason if some students come up with unexpected answers, and grant all students with similar answers partial or full points.

Communicate clearly to students whether their elaborations will be used to grade their exam, or whether you will only use them in specific cases like the one described here. You probably do not have time to consider all elaborations in Q4/Q5.

- If you change the answer model, make sure that no student receives a lower grade due to the correction.
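The correlation check mentioned under test result analysis could, for example, be done with an item-rest correlation: the Pearson correlation between the scores on one question and the sum of the scores on all other questions. The sketch below is only one possible way to do this and is not taken from the UTQ ASSESS reader; the function names, the example scores and the threshold of 0.1 are hypothetical.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation of two equally long lists; None if undefined."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        return None  # no variation, correlation is not defined
    return cov / sqrt(var_x * var_y)


def item_rest_correlations(scores):
    """`scores` has one row per student and one column per question."""
    n_items = len(scores[0])
    correlations = []
    for i in range(n_items):
        item = [row[i] for row in scores]
        rest = [sum(row) - row[i] for row in scores]  # total without item i
        correlations.append(pearson(item, rest))
    return correlations


def questions_to_review(scores, threshold=0.1):
    """Indices of questions with an undefined, negative or low correlation.

    The threshold is a hypothetical value for illustration only.
    """
    return [i for i, r in enumerate(item_rest_correlations(scores))
            if r is None or r < threshold]


# Hypothetical scores of four students on four questions (1 = correct).
scores = [
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 1],
    [0, 0, 0, 1],
]
correlations = item_rest_correlations(scores)
print(correlations)
print(questions_to_review(scores))
```

A flagged question is not necessarily wrong. It is a prompt to look at the question and the answer model again before deciding whether a correction is needed.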

If students are allowed to hand in an addition, for example, because they missed a practical or received a low grade for an assignment, schedule this process according to the deadlines:

- Communicate the grades and the assignment for the students who are eligible for the addition on 1 July (or earlier). If the addition is an extra assignment, make sure that it is available in time to the students.

- Set the deadline for the addition in week 5.3 (e.g., Friday 15 July).

- In the case of first-year bachelor students, communicate the grades via OSIRIS on 22 July (or earlier). Deadline in all other cases: 15 working days after the deadline of the addition, and no later than 20 August.
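To check a concrete date against the rule of 15 working days, a small date calculation can help. The sketch below is an illustration under assumptions: it counts Monday to Friday as working days, takes an optional set of holidays that is empty by default, and uses a hypothetical function name. Check the official academic calendar for the actual closing days.

```python
from datetime import date, timedelta


def add_working_days(start, working_days, holidays=frozenset()):
    """Return the date that lies `working_days` working days after `start`.

    Assumption: working days are Monday to Friday, minus `holidays`.
    """
    current = start
    remaining = working_days
    while remaining > 0:
        current += timedelta(days=1)
        if current.weekday() < 5 and current not in holidays:
            remaining -= 1
    return current


addition_deadline = date(2022, 7, 15)   # Friday in week 5.3
final_date = date(2022, 8, 20)          # "no later than 20 August"

grade_deadline = min(add_working_days(addition_deadline, 15), final_date)
print(grade_deadline)
```

With the addition deadline on Friday 15 July 2022 and no holidays in between, the grades are due on Friday 5 August 2022, which falls in week 5.6.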

Possible larger course changes for the coming years (as of 2022-2023)

The change of the academic calendar is permanent. Next year you may want to make larger course changes; two such changes are suggested below. These changes require permission from the programme director and the Board of Studies. Please keep in mind that in most cases a new course code and a transition regulation are needed. In addition, adjustments to the assessment also require changes in the study guide. Contact your ESA department for the timeline and templates for course changes in your faculty. As a rule of thumb, changes for courses in the next academic year need to be proposed to your educational committee (Board of Studies) by your programme director (Director of Studies) in February.

- Split an exam in two

  - Why? Less grading work at the end of the period.

  - Why not? It increases the number of assessments, which might be against faculty policy since this can increase the workload for both students and lecturers.

- Change the type of assessment

  - Why? Changing individual work to group work could reduce grading workload.

  - Why not? Whether this is desirable depends on the LOs. It also influences the assessment at programme level: are all LOs still assessed individually?